Despite being a bit overwhelmed lately, I wasn’t about to pass up on the chance to take a week-long spin in another Alfa Romeo. Before, it was Alfa’s hot rod sedan. This time, I was able to get seat time in their SUV, an Alfa Romeo Stelvio Ti.

Video

As with last time, I’ve put together a short video about the Stelvio. I’m pretty pleased with how this turned out; I’ve (mostly) sorted out my audio issues and have learned some new tricks. Just like last time, I’m happy to hear any constructive feedback that you may have, if you’re interested in sharing.

An Alfa Romeo… SUV?

Indeed. Take the Giulia that I tested a few months ago, lift it up, and hope for the best. Much to my surprise, most of these hopes were answered; the Stelvio was a stunningly passionate drive for what it was. SUVs are not supposed to be this, well, zippy.

The Stelvio’s ~300 HP would be a lot for a sedan, but doesn’t seem particularly overwhelming for a heavy, all wheel drive SUV. However, once the Stelvio’s turbocharger started to make boost, it was surprisingly adept at getting out of its own way.

Most surprising was the Stelvio’s sure-footedness on the road. Upon closer inspection, I shouldn’t have been so surprised. The Alfa Romeo isn’t the only thing named Stelvio: it bears the name of the Stelvio Pass. Avid Top Gear nerds may be scratching their heads right now, as this should sound familiar. The Stelvio Pass was — 🚨 SPOILER ALERT 🚨 — named by Top Gear as the best driving road in the world.

The Stelvio handled extraordinarily well given its height. I never felt like the center of gravity was at waist level, as I do in most other SUVs I’ve driven. In the Stelvio, I felt like the center of gravity was at the floorboard at worst. Body roll was virtually nonexistent.

All of this led to the Stelvio being a very confident drive. Generally speaking, I was only ever-so-rarely reminded that I was driving a tall SUV; left to its own devices my brain’s autopilot computed turn-in speeds roughly equivalent to my BMW sedan.

One of the slogans for the Stelvio is The SUV for the S-Curves. As 🙄 eye-roll-inducing 🙄 as that may be, it proved to be absolutely true.

Interior

The interior in so many ways felt extremely similar to the Giulia’s. There were a few small changes on the inside, some of which were really wonderful.

Most notable was the substitution of a truly lovely dark oak trim in lieu of the standard-issue hot-rod carbon fiber from the Giulia Quadrifoglio. I can’t speak highly enough of this oak trim; it lent an air of sophistication to the Stelvio that a traditional dark wood trim would not have. Perhaps this is my bias against brown woods showing, but that oak was my favorite interior trim ever.

Additionally, the panoramic sunroof was nice. I dearly missed having a sunroof in the Giulia Quadrifoglio. Many of my friends prefer “slicktops”, but to me, a car without a sunroof is not a car I want to own. The Giulia got a pass thanks to its tremendous drivetrain; the Stelvio would not have.

Thankfully, the sunroof is there, and it’s huge. I was surprised by how little it would actually open — most of the sunroof area was non-moving glass. However, it was enough, and the powered sunshade was a really lovely compromise of light-permitting but not light-emitting.

Second only to the oak trim, I also really loved that there was a smartphone “holster” between the cup holders. You can see this in my video, and it’s one of those great ideas that’s obvious in retrospect. It’s frustrating in my car that I don’t have any particularly good place to lay my phone; the upright holster between the cupholders in the Stelvio was perfect, and damn clever.

Well Actually

The as-tested price for my borrowed Stelvio was around $55,000. Not an entirely unreasonable sum for a modern, zippy, European SUV. However, there were some features that I felt should absolutely have been in this price bracket, but were missing:

- CarPlay and/or Android Auto (I should note it appears these are coming very soon)
- A birds-eye-view parking camera
- Autonomous driving features
- Remote control via smartphone app (though the Stelvio did have a remote start button on its keyfob)

Some of these features can be had in entry-level economy cars for little-to-no extra money. To see them missing from a car that’s north of $50k is more than a bit frustrating. Perhaps not a do-or-die issue, but it was enough to give me pause.

Conclusion

I’m not an SUV kind of guy. I like my cars low, fast, and reasonably small. The Stelvio, however, was very much the spiritual equivalent of that very set of traits. It was not huge, but not small. It was quicker than expected, if not outright fast. It handled stunningly well for a car as tall as it is.

So who is the Stelvio for? To my eyes, it’s a great car for someone who would generally consider a BMW X3, but wants something, well, interesting. Perhaps you fancy yourself a connoisseur. Or maybe you just like to think different. Assuming modern Alfa Romeo can put the reliability gremlins of its past behind it, the Stelvio is certainly an interesting choice, if nothing else.

A little over three years ago, I wrote about the moment when Declan was placed on Erin’s chest for skin-to-skin, shortly after his birth. In one very lucky picture, I was able to capture the most pure expression of euphoria on Erin’s face.

Then, we were first-time parents. Now, we’re new parents again; this time, because of Mikaela. Though we’re not first-time parents, we’re now first-time parents of siblings.

I had been excited for Declan to meet Mikaela for months before she was born. I didn’t know what was going to happen, but I had a feeling it was going to be pretty special.

Erin took this shot of me wiping away tears as I watched Declan hold Mikaela for the very first time. Much like Erin when Declan was placed on her three years ago, this was my moment. This was my euphoria.

Parenting a toddler and an infant is already proving to be challenging in a litany of ways neither of us really expected. It’s already exhausting. Nonetheless, we are extremely lucky. We have had two children — both through absolute miracles of science — after assuming that we would never be able to have even one. Even when it’s frustrating, life is good. In this particular moment, life was amazing.

Mikaela Charlotte Liss. Born very early on Thursday morning. She weighed in at 9 pounds, 10.5 ounces and was 21 inches long.

Mom and Mikaela are doing well. Her older brother is still feeling things out, but has been considerably more positive than not.

I’m fulfilled. Somewhere deep down, even after Declan, I knew our little family wasn’t quite complete. Now that Mikaela is here, I am — and we all are — whole.

This week I returned to Clockwise, joining Lory Gil, Dan Moren and Mikah Sargent. We discussed wishes for Apple for 2018, personal tech stories from the holidays, apps that delight us, and running virtual machines.

If you’ve somehow missed out on Clockwise, you’re doing yourself a disservice. Clockwise is a fun and fast-paced show that never lasts more than a half hour. Even the busiest podcast listening schedules can — and should! — squeeze Clockwise in.

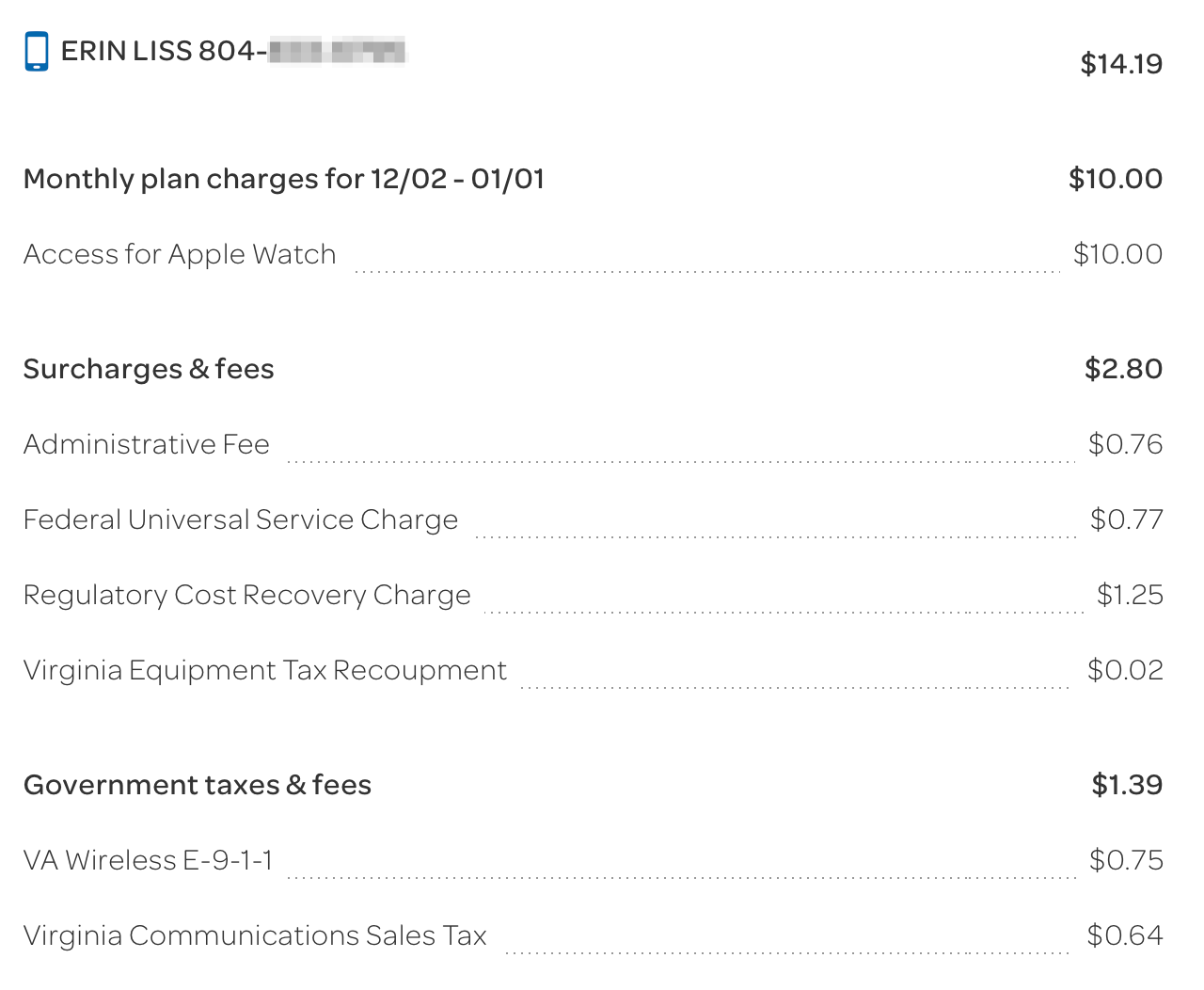

When the Apple Watch Series 3 with LTE was first announced, the LTE service was advertised as “10 dollars per month”. This has turned out to be, well, sorta true. At least, on AT&T.

The first full month of Apple Watch ownership, I looked at my bill and noticed—to my horror—that the “$10 per month” was not inclusive of fees and charges. Since the Watch has its own cellular line, it has a plethora of these fees. So many of them, in fact, that my first full month’s bill was $17. I lamented this on ATP (Overcast timestamp link) when it happened.

I didn’t want to make too big a stink about it, because it was the first full month’s bill, and I wasn’t sure if it was really indicative of the true cost. Having waited a few months now, the last couple of watch bills have indeed leveled out.

AT&T charges me $14.19 per month to have LTE service on my Apple Watch in Virginia.

Here’s the relevant excerpt of my bill:

This is really preposterous. My understanding is that other carriers, such as the self-proclaimed “uncarrier”, T-Mobile, charge exactly $10/month. It would appear they just take earnings on whatever is remaining of the $10 after taxes and fees. Naturally, the customer-hostile AT&T does no such thing.

These unspoken-for fees really piss me off, and though I understand that these are AT&T’s fault, it’s hard not to feel like Apple sold me a bill of goods but didn’t end up really delivering. Having re-watched the keynote, Apple was smart enough not to say anything specific about pricing during the event, but the dim-witted consumer in me didn’t remember it that way.

I friggin love my Apple Watch Series 3, and I friggin love going for jogs with just my Watch and AirPods. It’s really game changing. But it does chap my ass seeing this AT&T bill every single month.

The Alfa Romeo Giulia is, in some ways, a moonshot by Alfa Romeo. We Americans haven’t seen an Alfa Romeo sedan in a very long time. The Alfa Romeos that we have seen have been coupes or convertibles, and they have been very rare. The Giulia—and the Stelvio—are Alfa Romeo’s attempts to recapture the American market.

Thanks to a friend, I was able to get seat time for a week in a 2017 Alfa Romeo Giulia Quadrifoglio. The experience of getting a press car is likely going to be a blog post in and of itself, but for today, I want to talk about the car.

Video

If you’d like to hear and see me talk about the car, please check out my first car review video on YouTube:

This is my first effort at doing, well, anything like this. The video has some areas for improvement, but I’m proud of it. I hope it comes across as a great starting point for something big, because that’s what I think it is.

If you’d like to give me feedback about the video, please contact me.

What is this?

The Giulia, in general, is in many ways a competitor to my current car: the BMW 3-series. Though it felt a bit larger than my E90, it definitely felt as though it was in the same segment. Interestingly, much like the 3-series, the Giulia has quite a broad reach. The “beater” model is $38k, which is aspirational but attainable for many people. The range continues all the way up to the car I tested, the Quadrifoglio, which starts at almost $75k.

The car I drove is in many ways a BMW M3 competitor. An M3 competitor with a 500+ HP twin-turbo V6 that is—by all reports—sourced from the Ferrari California. In many ways, the Giulia Quadrifoglio is not shy about the notion that it’s chasing, and possibly catching, the M3.

The Inside

So much of the Giulia felt eerily familiar to me. As has been said, great artists steal, and Italy is nothing if not a country of great artists. In this case, the interior designer clearly stole from BMW. To my admittedly biased eyes, that’s a great place to steal from.

The infotainment was a knockoff of BMW’s iDrive. It wasn’t as nice, nor as intuitive, but it was nice enough and intuitive enough to keep me from getting infuriated. In fact, the only thing that really drove me bananas was being compelled to manually re-enter Bluetooth audio mode (to listen to audio from my iPhone) every time the car was started.

Otherwise, the interior was nice. The build quality felt great and easily on par with its German neighbors. Switchgear felt great and was obviously located.

There was some Italian flair, of course. The steering wheel is flat-bottomed. The start/stop button is on the wheel, rather than on the console. The turn signals would return to center—much like BMW—but could only be canceled by pushing the opposite direction. (My car lets you cancel by re-engaging the signal in either direction.) Even at the end of the week, it infuriated me.

Nevertheless, cars aren’t really about their interior, and the Quadrifoglio is definitely not. It’s about being on the road.

The Drive

Driving the Giulia was an interesting experience, because it was driving four different cars all at once. The Quadrifoglio has a drive mode selector—as do most cars of this caliber—that carries the cutesy labels of DNA:

- Dynamic: Sporty but still approachable
- Natural: Normal/balanced driving
- Advanced Efficiency: In case you’re feeling frugal in your twin-turbo V6

Additionally, there is a fourth mode available:

- Race: Hold onto your butts

When Race mode is engaged, the exhaust gets louder, and has a different pitch. Stability control is turned off. Traction control is turned off. The car is 100% raw, and is 130% ready to murder you. There is no way to turn traction or stability control back on. When you’re in Race mode, there is no turning back.

One thing I wished for so badly was the ability to use the Race mode exhaust, throttle response, engine timing, and everything else, without disabling traction or stability control. I know my skills, and they left me wanting. I need a parachute.

However, the Quadrifoglio in full attack mode is vicious. The shifts are nearly instant, and the response is too instant for a forced induction car, unless you’re really low in the rev range. Whenever I hit redline, the car felt like it had much more to give. It was champing at the bit, begging for more.

500+ HP will do that to you.

Furthermore, despite the car having one of the much-maligned electric power steering systems, I actually couldn’t tell. During the time I had the Giulia, before I looked up what kind of power steering it used, I wasn’t sure by feel alone if it was hydraulic or electric. Thinking about it, that’s about the biggest compliment I can pay the steering.

But let’s take a step back:

The shifts are nearly instant

Why… why are the shifts something that’s happening to me? Are you telling me this car isn’t a three-pedal car‽

The ZF 8-speed

This car absolutely has an Achilles heel, and it is the transmission.

Or does it?

I’ve been a devout driver of standard transmission cars for my entire life. I don’t want to buy anything else, ever. I hate relinquishing the control of the car to a computer. I want to be the one that decides when to shift. I want to be the one that is challenged to shift effectively, quickly, and smoothly. I want it to be all about me.

The Giulia is powered by a ZF 8-speed automatic transmission. Not a dual clutch, but a true, torque-converter-included, automatic.

Stop the review, I’m out.

Except, maybe not.

I had heard many, many times in the past that the ZF 8-speed, used in many modern BMWs among others, is really good. Like, really good. I remained skeptical, though. Give me a clutch pedal—even a computer-operated one—or give me death.

After a week with the Giulia, I’m not so sure I was right.

The Giulia Quadrifoglio is a stunningly, stunningly fun car to drive. And though I occasionally missed rowing my own, often times, just wrenching back on the + paddle gave me nearly the exact same feeling. Sure, there are few things in the world that can match a perfect, heel-toe, rev-matched downshift. But holy hell, hearing and feeling this thing rip through the gears, pulling on the paddles like the leash on a rabid dog, was amazing fun.

Which gave me pause.

Second Thoughts

My entire life, I’ve defined myself in many ways, not the least of which is my insistence on driving a manual transmission. I sneered at all the old mustachioed men in their automatic Corvettes, buying a car that was ostensibly about performance, but in reality about showing off. I snickered every time I saw a DCT M3 outside of the track, attributing the choice to laziness or ineptitude rather than performance.

Now, I’m not sure what to think.

I had so much fun in the Quadrifoglio. More fun than I ever expected. To be sure, I expected to have quite a lot of fun. But as it turns out, a properly tuned automatic, which favors locking itself up as often as possible, can get me 80% or 90% of the way to perfection. In fact, it can make me happy in ways a stick can’t, particularly in traffic.

The Giulia Quadrifoglio made me rethink what I thought about transmissions.

What I thought about cars.

What I thought, and think, about myself.

Which is a hell of a lot to get out of a week with a hunk of metal.

The wonderful organization, App Camp for Girls, has been interviewing various people in the field as part of their “Fireside Chat” series. My interview was just posted, and I’m happy with how it turned out.

There’s a ton of great interviews; be prepared to lose a couple hours to all of them.

Also worth noting, App Camp for Girls is currently raising money to expand to three more cities by 2020. App Camp’s mission is to promote gender equality in technology, which is something everyone should be able to throw their weight (and money) behind.

If you have even a dollar to spare, please support AC4G. I have.

Though I haven’t talked about it here on the blog, I was lucky enough to get a week of seat time in a really nice car. That seat time was arranged by Sam Abuelsamid of Wheel Bearings.

When we set out to do Neutral, what we hoped to be creating was what Wheel Bearings actually is: informed hosts, with diverse opinions, talking about all things automotive. Between Sam and Dan Roth, there is not only tremendous knowledge (which we lacked) but also access to press cars (which we also lacked).

On this episode of Wheel Bearings, we discuss the Alfa that I borrowed, and I pick a fight about Volvo’s Sensus infotainment system. When Dan and Sam could get a word in edgewise, they discussed the cars they’ve been driving recently, as well as Sam’s experience in an automated vehicle.

Wheel Bearings is a great show you should always listen to, and I like to think this is a great introductory episode.

I’m a day late for my Thankful Thursday “thing I like” post, but yesterday was Erin’s birthday, so, I’m giving myself a bye. Keeping in the spirit of Erin, I’d like to talk about something I really love about her new car.

When we took delivery of Erin’s Volvo XC90, we were presented with this box:

I’ve never seen nor heard of keys coming in a fancy box like this, so immediately I was impressed. Everyone likes to feel special, and I felt like we were getting the white glove treatment. By comparison, though I bought my BMW used, I’d never heard of BMW keys coming in a fancy box like that.

However, the box isn’t what I want to talk about. I want to talk about that little piece of plastic that’s hiding in the right-hand side of the box. I took out the other full-size key so you could see it. The thing I like this week is the Volvo “Key Tag”.

Erin’s XC90 has a proximity key, which it refers to as a “Passive Entry” and “Passive Start” system. In BMW nomenclature, it’s a part of “Comfort Access”. Regardless of the marketing name, the… well… key here is that you don’t need to ever touch the car key to enter and start the car. For someone who keeps his keys in his pocket, this is a fantastic feature that now I’m not sure I can live without. I can only imagine how convenient this is for someone like Erin who keeps her keys in her purse.

In order to open either of our cars, we simply need to grab the door handles, as long as the key is on our person. Once we get in, we can turn the car on without touching the key. When we exit, we keep the key on us, and touch a special part of the door handle to lock the car. This largely obviates the traditional remote lock/unlock features of our car keys. I almost never use my car key to lock or unlock my car remotely; I only do so by grabbing the door handle.[1]

When it came time to claim one of Erin’s keys as my own, I immediately knew which one I wanted: the Key Tag.

Erin’s Volvo came with two traditional—and large—keys that have all the buttons you’re used to: lock, unlock, trunk release, and a panic button. But the Key Tag is the thing I like for this week.

The Key Tag also works as a proximity key, but doesn’t have any buttons on it. By not having any buttons on it, that means the Key Tag can be much much smaller than the full-size keys with remote lock/unlock. For someone who carries his keys in his pocket, that makes a world of difference. Further, the Key Tag is totally sealed, which means it’s also waterproof.

Since Erin wanted to carry a full-size key in her purse, I got my choice of either the other full-size key, or the Key Tag. Without hesitation, I chose the Key Tag. Even if this were primarily my car, I’d almost certainly[2] still choose to carry the Key Tag over one of the traditional keys. Having that much less in my pocket is fantastic, and I wish there were an equivalent for my car.

There’s a lot to like about Erin’s car, some of which I may talk about in the future, but the seemingly simple Key Tag may be my favorite.

[1] Before I get a bunch of "Well, actually"s, the one exception to this rule is that I do occasionally roll down all my windows from afar using my car key. This is not possible by physical contact with the car; only by holding the unlock button on the remote.

[2] The only problem with the Key Tag is that it doesn’t have a traditional “key blade” inside it, should the car’s electronics have a fault. Thus, if I were by myself with Erin’s car, and the battery died, I wouldn’t be able to get in the car to open the hood. That may cause me to carry the large key if it were my primary car, but as an at most occasional driver, I’m not too worried about it.

For the last year and a half, I’ve been working full time as a Swift developer. I love Swift, and I’ve also been really enjoying diving into Functional Reactive Programming using RxSwift. Nevertheless, I find myself longing for something that I don’t have anymore: a robust introspection API.

When I was writing C#, I could write a simple class like this:

    public class Person
    {
        public string FirstName { get; set; }
        public string LastName { get; set; }
    }

Now let’s say I want to send or receive a Person from a RESTful API. Perhaps the transmission will be JSON, and it will look something like this:

    {
        "fn": "Casey",
        "ln": "Liss"
    }

If we wanted to map a C# Person from a JSON dictionary, it’s reasonably straightforward, except that there’s an important discrepancy: we need to tie fn to FirstName and ln to LastName.

There’s a ton of ways one can create a link between those different names. My favorite, in C#, was to use introspection. In C#, this is called Reflection, and that’s how I still refer to it to this day.
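For comparison, here is a minimal sketch of one of those other ways, in Swift: the Codable protocol with a CodingKeys enum. This is not from the original C# example, and it relies on code the compiler synthesizes rather than on runtime reflection. The `decodePerson(from:)` helper is a hypothetical name used only for illustration.

```swift
import Foundation

struct Person: Codable {
    var firstName: String
    var lastName: String

    // Ties each Swift property to its short JSON key.
    enum CodingKeys: String, CodingKey {
        case firstName = "fn"
        case lastName = "ln"
    }
}

// Hypothetical helper, named for illustration only.
func decodePerson(from json: String) throws -> Person {
    return try JSONDecoder().decode(Person.self, from: Data(json.utf8))
}

do {
    let person = try decodePerson(from: "{\"fn\": \"Casey\", \"ln\": \"Liss\"}")
    print("\(person.firstName) \(person.lastName)") // prints "Casey Liss"
} catch {
    print("Decoding failed: \(error)")
}
```

The key names still live right next to the properties they describe, but they are a nested type rather than metadata that other code can discover at runtime.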

Reflection is neat because it allows my code to learn about itself. That means I can add some metadata to my code—I can annotate it—in order to provide some supplementary information. How does that work in practice? Let’s augment the Person class, adding and leveraging a new Attribute.

    using System;

    // Custom attribute that stores the JSON key for a property.
    public class JsonKeyAttribute: Attribute
    {
        public string Key { get; set; }

        public JsonKeyAttribute(string key)
        {
            this.Key = key;
        }
    }

    public class Person
    {
        [JsonKey("fn")]
        public string FirstName { get; set; }

        [JsonKey("ln")]
        public string LastName { get; set; }
    }

Now we are annotating our properties with new metadata: we’re storing the keys needed to translate to/from JSON right there inline. How do we leverage it? Let’s write a static factory method:

    using System;
    using System.Linq; // for FirstOrDefault()

    public class Person
    {
        [JsonKey("fn")]
        public string FirstName { get; set; }

        [JsonKey("ln")]
        public string LastName { get; set; }

        public static Person FromJson(string jsonString)
        {
            var retVal = new Person();
            var properties = typeof(Person).GetProperties();
            foreach (var property in properties)
            {
                // Look for a [JsonKey] annotation on this property.
                var attribs = property.GetCustomAttributes(typeof(JsonKeyAttribute), true);
                var attrib = attribs.FirstOrDefault() as JsonKeyAttribute;
                if (attrib != null)
                {
                    var key = attrib.Key;
                    var value = // Get value from JSON object
                                // using the key we just discovered.
                    // Set the property's value
                    property.SetValue(retVal, value);
                }
            }

            return retVal;
        }
    }

Admittedly, I’ve glossed over the conversion from JSON string to something meaningful, as well as extracting "Casey" and "Liss" from the JSON. However, the rest of the code is the point. We can leverage Reflection to look at the Person class and see what the key is for each of its properties.

Having the ability to annotate our code with information about itself is super powerful. Using an Attribute, we were able to leave information about how to convert between different representations of the same data right in the class that needs to know about it. Some purists say that’s a poor separation of concerns; to me, that’s improving local reasoning.
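Swift’s closest built-in counterpart is Mirror, and a short sketch shows both what it offers and where it stops. This example is an illustration, not part of the original code: Mirror can list a value’s stored property names and values, but it is read-only and has no notion of custom annotations like JsonKey.

```swift
struct Person {
    var firstName: String
    var lastName: String
}

let casey = Person(firstName: "Casey", lastName: "Liss")
let mirror = Mirror(reflecting: casey)

// Collect the name of each stored property, in declaration order.
let labels = mirror.children.compactMap { $0.label }
print(labels) // prints ["firstName", "lastName"]

// Values can be read, but Mirror offers no way to set them.
for child in mirror.children {
    print("\(child.label ?? "?"): \(child.value)")
}
```

So the property names are discoverable, but there is nowhere to hang the "fn" and "ln" keys, and no equivalent of SetValue for writing the decoded values back.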

Furthermore, using annotations can accomplish interesting things in arguably far cleaner ways.

As an example, if you want to specify a “pretty printer” for the purposes of debugging a Swift class, you can use CustomDebugStringConvertible. However, to do so, you must implement the protocol. For example:

    struct Person {
        var firstName: String
        var lastName: String
    }

    extension Person: CustomDebugStringConvertible {
        var debugDescription: String {
            return "\(firstName) \(lastName)"
        }
    }

The approximate equivalent in C# is arguably cleaner, because it doesn’t require implementing a new interface. Instead, you simply leverage the DebuggerDisplayAttribute:

    using System.Diagnostics;

    // "nq" tells the debugger to show the strings without quotes.
    [DebuggerDisplay("{FirstName,nq} {LastName,nq}")]
    public class Person
    {
        [JsonKey("fn")]
        public string FirstName { get; set; }

        [JsonKey("ln")]
        public string LastName { get; set; }
    }

I can think of a ton of other places where reflection is useful as well. I’m particularly interested in how cool it could be to really open up the already crazy-powerful Swift enums by adding the ability to annotate them, or reflect over them. Oh, the crazy things I could do… 🤔
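A small piece of this does exist in Swift 4.2 and later: the CaseIterable protocol, which has the compiler synthesize a list of an enum’s cases. The sketch below is an illustration under that assumption, and the JsonKey enum is a hypothetical name borrowed from the earlier example. It covers enumerating cases only; it does not add annotations.

```swift
// Hypothetical enum, for illustration only.
enum JsonKey: String, CaseIterable {
    case firstName = "fn"
    case lastName = "ln"
}

// allCases is synthesized by the compiler, in declaration order.
let rawKeys = JsonKey.allCases.map { $0.rawValue }
print(rawKeys) // prints ["fn", "ln"]
```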

Reflection isn’t for everyone. In fact, I got the following email from an ATP listener:

If you need reflection to reason about and execute code runtime, your API is poorly designed. Can you please explain why you feel the the need for a reflection API?

That’s a pretty severe take-down.

I understand the sentiment, and this particular listener isn’t necessarily wrong. But what I love about reflection is that it opens up the possibility for a whole new way of solving problems. A way that I’ve found to be quite convenient from time to time.

In fact, all of you Objective-C developers out there may enjoy doing things like this on occasion:

    id person = [[NSClassFromString(@"Person") alloc] init];

To me, that’s reflection.

A while back there was a big kerfuffle amongst some Objective-C developers who were, erm, objecting to the lack of dynamic features in Swift. To me, the canonical, level-headed post about this was this wonderful post by my pal Brent Simmons. It’s an extremely short but accurate summary of what all of the Objective-C folks seemed to think Swift was lacking.

To me, I can summarize his post in one word: reflection.